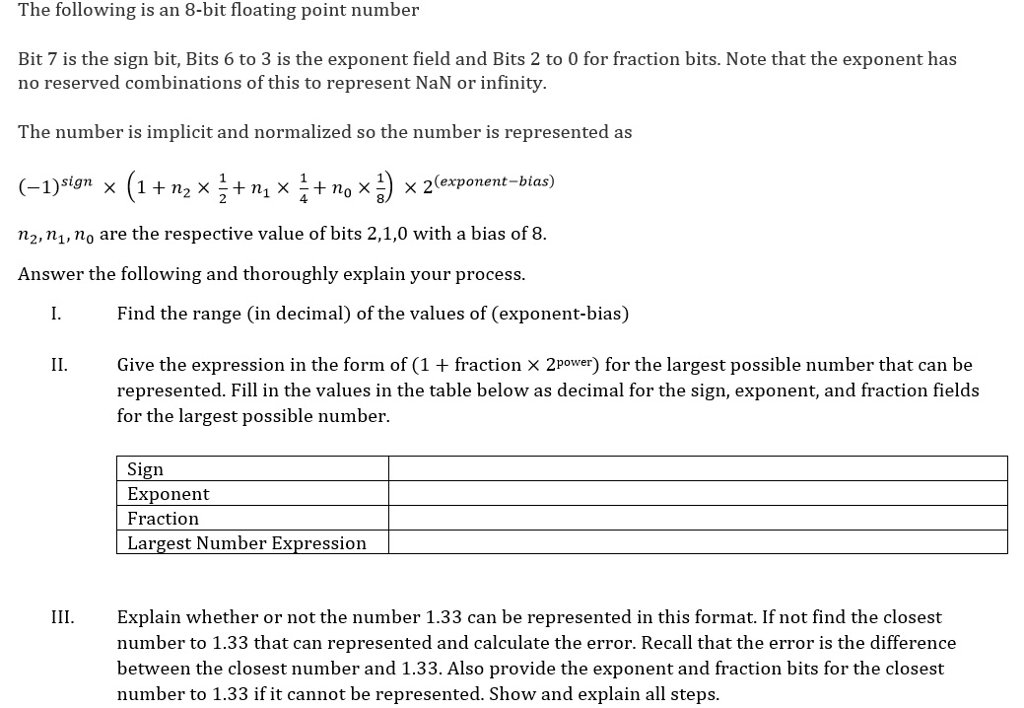

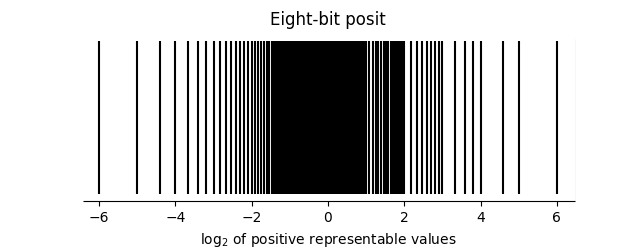

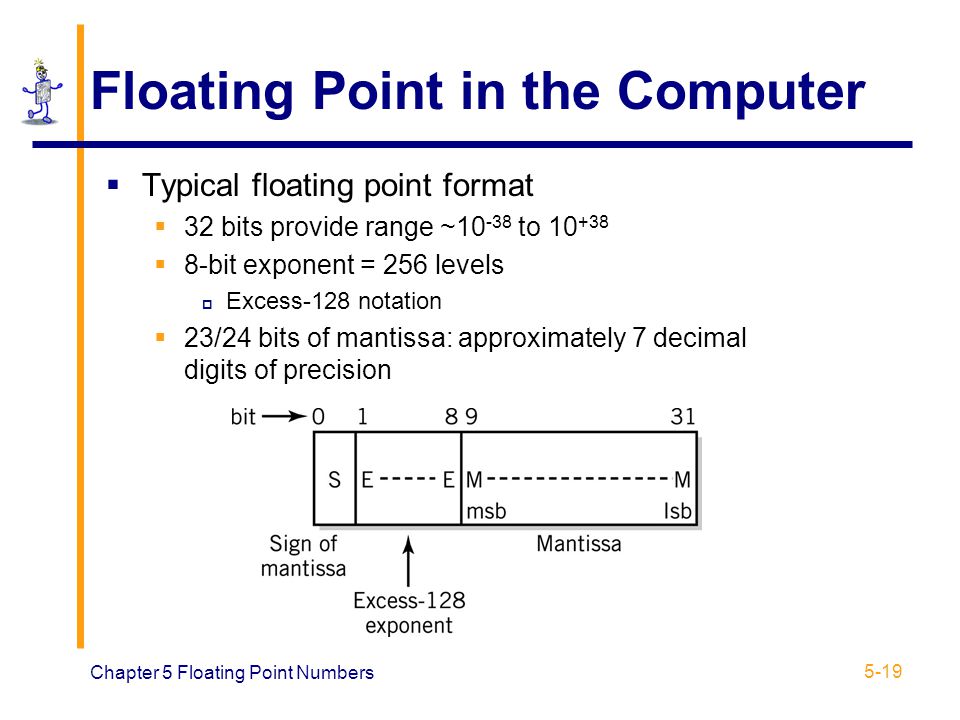

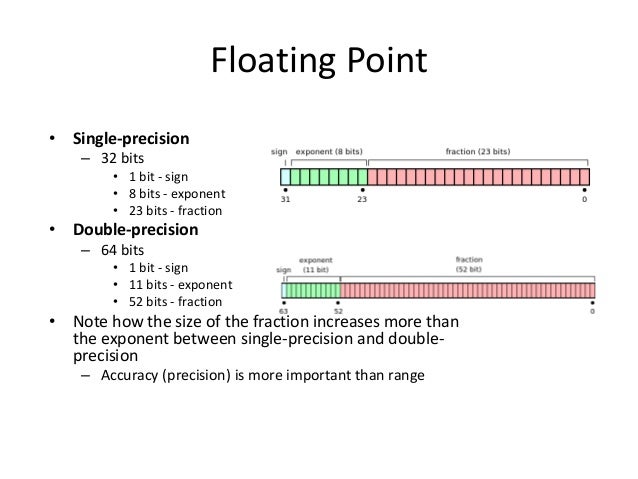

8: Floating point number machine representation. (a) 32 bit word size,... | Download Scientific Diagram

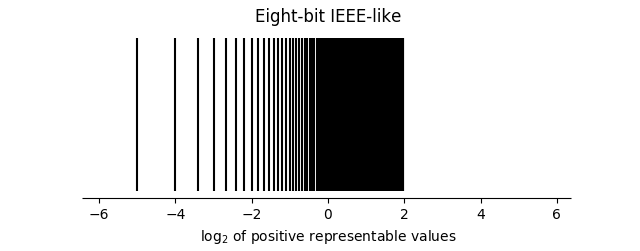

[Solved] IEEE 754, Single Precision: Exponent: 8 bits, Mantissa (Significand): 23 bits, S EEEEEEEE MMMMMMMMMMMMMMMMMMMMMMM fp16: Half-precision IEEE Fl... | Course Hero

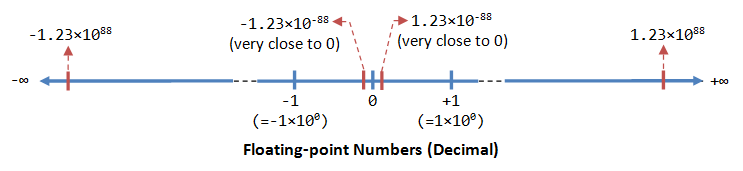

Double precision floating point numbers - theingenioustv.com

Speed up your TensorFlow Training with Mixed Precision on GPUs and TPUs | by Sascha Kirch | Towards Data Science

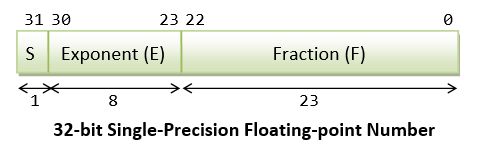

An illustration of the common floating-point formats used, including... | Download Scientific Diagram